Parsing Amazon CloudTrail JSON Logs with a Customized Logstash Build

In November 2013, Amazon released CloudTrail, a web service that logs Amazon Web Services (AWS) requests to store in an S3 bucket. Here’s how we made it work with Logstash – originally with version 1.2.3 and later with the no-longer current development branch, Logstash 1.4.0.

This post was updated on May 2nd, 2016 but should be considered historical. Please see the note on the updated version below. The information here is still valid in terms of the old Logstash 1.4.0 branch, but after two years, there are surely better ways to handle CloudTrail input. (Whether they actually work is another matter.)

Having logs is one thing. Finding a way to make them useful is another. On Medidata’s DevTools team, we wanted a free, open source log analysis tool to enhance our flexibility and control. We chose Logstash because of its active development community and its many appropriate filters. There’s a good comparison of other options here.

CloudTrail saves its log files as gzipped JSON files representing a five minute chunk of time. Since Logstash can handle S3 downloading, gzip decompression, and JSON parsing, we expected CloudTrail parsing to be a piece of cake.

Cake it is not. Out there in Internet-land, people are searching for answers.

Ultimately, we made a custom jar build. This post demonstrates how and why. If that sounds like homework, just download the build and adjust the configuration file with your details.

- logstash 1.4.08 Medidata dev flat jar – md5: 732c311e5d087dd2b86968370d27bc99 – updated 5/2/2016 with newer Excon and Fog builds supporting v4 authentication.

- s3fog_dummy.conf – md5: 8756109c0b915fa90e17ea175894dcb2 … This file’s also shown in its entirety at the bottom of this post. Updated Ruby filter code on 3/19/2014; removed region_endpoint config variable 5/2/2016.

The Four Headaches of Logstash and CloudTrail

-

Logstash wouldn’t connect to S3. AWS command-line tools were working, and Ruby scripts using the Fog gem were working; only Logstash failed to connect.

-

Once we had S3 access working, Logstash took only the first event of the thousands in each log file. Logstash’s JSON parser was incapable of handling arrays of events. So, the arrays needed to be split.

-

Once split, Logstash would not permit further filtering of the events. It was necessary to modify Logstash to re-process the newly spawned events to get accurate time data.

-

Lastly, ElasticSearch, which handles storage and querying, would not allow mixed data types and would thus sometimes choke on the “responseElements” field. The safest bet was to convert this field to a String.

First, An Alternative Solution

Fellow logstash-users Google groups contributor Geoff Owen approached the CloudTrail problem so:

I used s3cmd to grab the files, and then used [jq, a command line JSON parser] to break out the JSON to single lines, that I then pushed to a log file for logstash to pick up.

The jq solution sounds viable, but why build a Rube Goldberg contraption outside of Logstash when you can build that contraption inside it?

Connecting to S3

The first problem was a failure to connect to S3. (If you are not having this problem, skip this part and change “s3fog” to “s3” in the sample configuration, or, better yet, use the one you already wrote.)

Here’s the error output as it appears in the last Logstash release (1.3.3):

A plugin had an unrecoverable error. Will restart this plugin.

Plugin: <LogStash::Inputs::S3 type=>"json", bucket=>"hyacinth", credentials=>["/foo/bar/my_credentials_file"], region=>"us-east-1", region_endpoint=>"us-east-1", prefix=>"logs/">

Error: execution expired

Exception: Timeout::Error

Stack: org/jruby/ext/socket/RubyTCPSocket.java:111:in `initialize'

org/jruby/RubyIO.java:1178:in `open'

jar:file:/Users/ben/logstash_dev/logstash/logstash-1.3.3-flatjar.jar!/META-INF/jruby.home/lib/ruby/1.9/net/http.rb:762:in `connect'

org/jruby/ext/timeout/Timeout.java:127:in `timeout'

...

And so on. (Let’s skip the complete stack trace, which was basically Finnegan’s Wake.)

We tried numerous different configuration methods to connect to our S3 “hyacinth” bucket, double checked all credentials, and always arrived at the same alleged timeout. It’s not clear if this was a network configuration issue of some kind, a troubled JRuby library, or something else. We had no S3 problems with other Ruby apps using Fog, a separate cloud services library, so the solution was to write an input filter using Fog. It is called “s3fog” and the plugin source can be viewed here.

S3fog is configured exactly like Logstash’s own s3 plugin. Bear in mind, it will probably never be incorporated into future Logstash releases.

Splitting the JSON Arrays

The JSON is one single hash with the key “Records” pointing to a hash with the single key “message” containing an array where each element represents one API event. Logstash was now receiving these files, but only reporting one event from each file. As the source code sayeth, in Split.rb 37.1:

# If for some reason the field is an array of values, take the first only.

original_value = original_value.first if original_value.is_a?(Array)

That’s where the next patch comes in: changing the split filter to handle arrays in JSON. The change can be seen in Logstash pull request 787, although the same changes exist in pull request 793 (see below), so if you’re following along at home, don’t merge 787 just yet.

This change adds a “reuse_element” configuration option which determines the structure of the results. In either case the filter yields a set of results into the pipeline. If “reuse_element” is true, the array element within the hash is overwritten with the value of one of its elements. E.g. “Records” => [1,2,3,4] will lead to 4 different events, each one with “Records” => 1, and so on. This is useful if “Records” has sibling values we do not wish to discard; they wind up cloned in each new event. But that’s for other people. We CloudTrail users want “reuse_element” set to false, because “Records” has no siblings. Each individual array element gets returned into the results as a single new event.

At time of writing, Logstash intends to incorporate the split filter change, so you can expect to find it in an upcoming Logstash release. Configuration changes may still be necessary.

Refiltering the Newly Created Events

Logstash has a bug: events spawned by filters do not get passed back into the pipeline to be filtered again. This poses a problem for us, because we never had a chance to point Logstash at the correct timestamp. Logstash was processing data into discrete events, but assigning the current time to every event. Obviously that’s of limited value.

The change in pull request 793 adds a “refilter” option which allows us to reinsert new events into the pipeline for further processing. Tags and conditionals in the configuration can be used to create a sensible flow while preventing infinite loops. Apply the date filter to the new events, and now you’re in business.

With those code changes, you can build the Logstash jars (or download ours, as seen above), and you’re almost done. To make the jar file:

rm -rf build ; make flatjar

If you encounter troubles with the build, change the Makefile so that QUIET= (instead of QUIET=@) and try again– now you can see what’s actually going. If you’re seeing excon gem problems, update the Gemfiles to ensure they use excon 0.28.0 instead of 0.25.3. If the build chokes on other gems, try installing them manually with gem install whatever_gem --version=whichever.

Sporadic “ResponseElements” Failures (see update below)

Certain “responseElements” log values– nulls or hashes–would choke ElasticSearch, if the class was not a String. The solution? Convert all values to a string. This results in some structural loss to the data, but no loss to the data itself. So, the configuration incorporates this little hack using Ruby’s inspect method, which returns a String representation of any class instance:

ruby {

code => "event['responseElements'] = event['responseElements'].inspect"

}

Update for 3/19/2014

It seems that “responseElements” and “requestParameters” can both cause problems, so here’s an update to the ruby code block:

ruby {

'code' => '["responseElements","requestParameters"].each { |field| event[field] = event[field].inspect } '

}

Update for 3/24/2014

This update fixes a problem in the s3fog plugin which was incorrectly updating the sincedb time to “now” before reaching the end of the new files. This posed no problem under stable circumstances but caused data gaps if logstash crashed while indexing. No configuration changes are necessary.

Update for 5/2/2016

After a couple years of steady use of the 1.4.07 build, we were forced to release a small update (linked above as 1.4.08) to handle V4 authentication in the Fog gem, required by S3 buckets in European regions, and remove the validation in the S3/S3fog plugins that enforced a now-incomplete set of regions (notably excluding eu-central-1). You shouldn’t need the update if you’re only using us-east-1. Our latest version is still forked off the now-ancient Logstash 1.40 branch.

Newer versions of Logstash include a Cloudtrail input plugin for Logstash, and the other issues we found in 2014 are supposedly resolved. The plugin didn’t seem to work immediately for us, however, and we’ve had limited time for experimenting with it on our team, so we stuck with our working fork. At this point, we’re providing the updated JAR primarily as a last hope for anyone experiencing trouble, but we strongly encourage users to experiment first with newer versions of Logstash. We will be abandoning this separate branch as soon as there’s time to investigate a more modern configuration and ensure the processing is successful.

One other small note: this update removes the mandatory region_endpoint configuration variable in the S3fog plugin; “region” will be sufficient. (It also removes the Twitter output plugin due to some dependency troubles in compilation; it’s doubtful that will affect anyone still using this forked branch.)

And Now The Full Configuration

The configuration’s input section looks exactly like an s3 plugin section, but uses our replacement input filter. The output section requires no changes under discussion here, but the stdout debugging mode will help you scrutinize the results until you’re confident it’s working.

The filter section takes advantage of Logstash’s conditional filter handling, tagging, and our “refilter” option to create a two-step process. On first pass, “splitted” is not in the tags, so the configuration pushes it into the JSON filter. The parser takes the “message” field from the input hash. That contains the entirety of our event data, filed under “Records.” So, the “split” filter takes the “Records” field and splits it into multiple events. The “refilter” option tells Logstash to process the newly spawned events. To avoid going through the JSON parser again, we add the “splitted” tag.

On the second pass, the “splitted” tag is there, so our data moves on to the second set of filters. The “date” filter takes the event date from the “eventTime” field and parses it via ISO8601 format. The “ruby” filter converts “responseElements” into a String. And that’s it—boom!

input {

s3fog {

'bucket' => 'hyacinth'

'credentials' => ['YOUR_AWS_ACCESS_KEY_ID','YOUR_AWS_SECRET_ACCESS_KEY']

'delete' => false

'interval' => '60'

'prefix' => 'logs/'

'region' => 'us-east-1'

'type' => 'json'

}

}

filter {

if !("splitted" in [tags]) {

json {

'source' => 'message'

}

split {

'add_tag' => ['splitted']

'field' => 'Records'

'refilter' => true

'reuse_element' => false

}

}

if ("splitted" in [tags]) {

date {

'match' => ['eventTime', 'ISO8601']

'remove_tag' => ['splitted']

}

ruby {

'code' => '["responseElements","requestParameters"].each { |field| event[field] = event[field].inspect } '

}

}

}

output {

stdout {

debug => true

codec => rubydebug

}

elasticsearch { embedded => true }

}

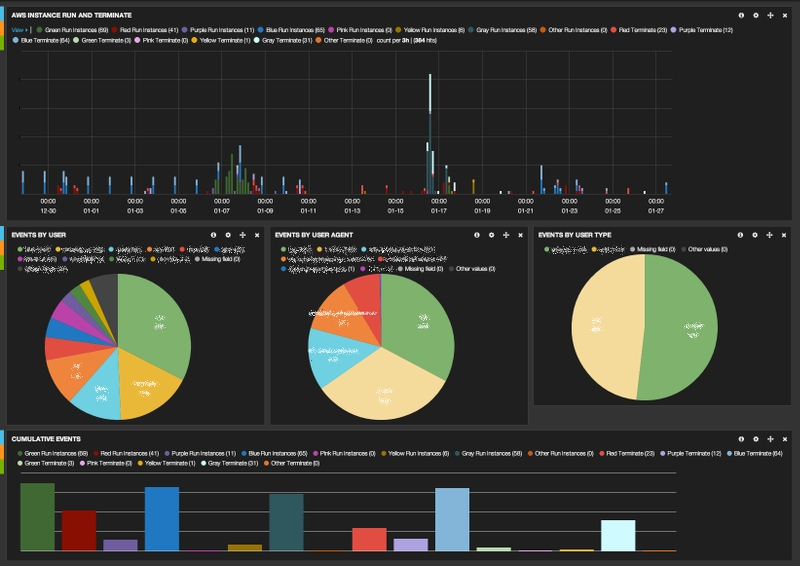

Now start the Logstash agent and web server, open up a browser to Kibana, and watch your beautiful event data flow like wine!